Epistemic misalignment in AI design

Why current architectures encode outdated models of intelligence

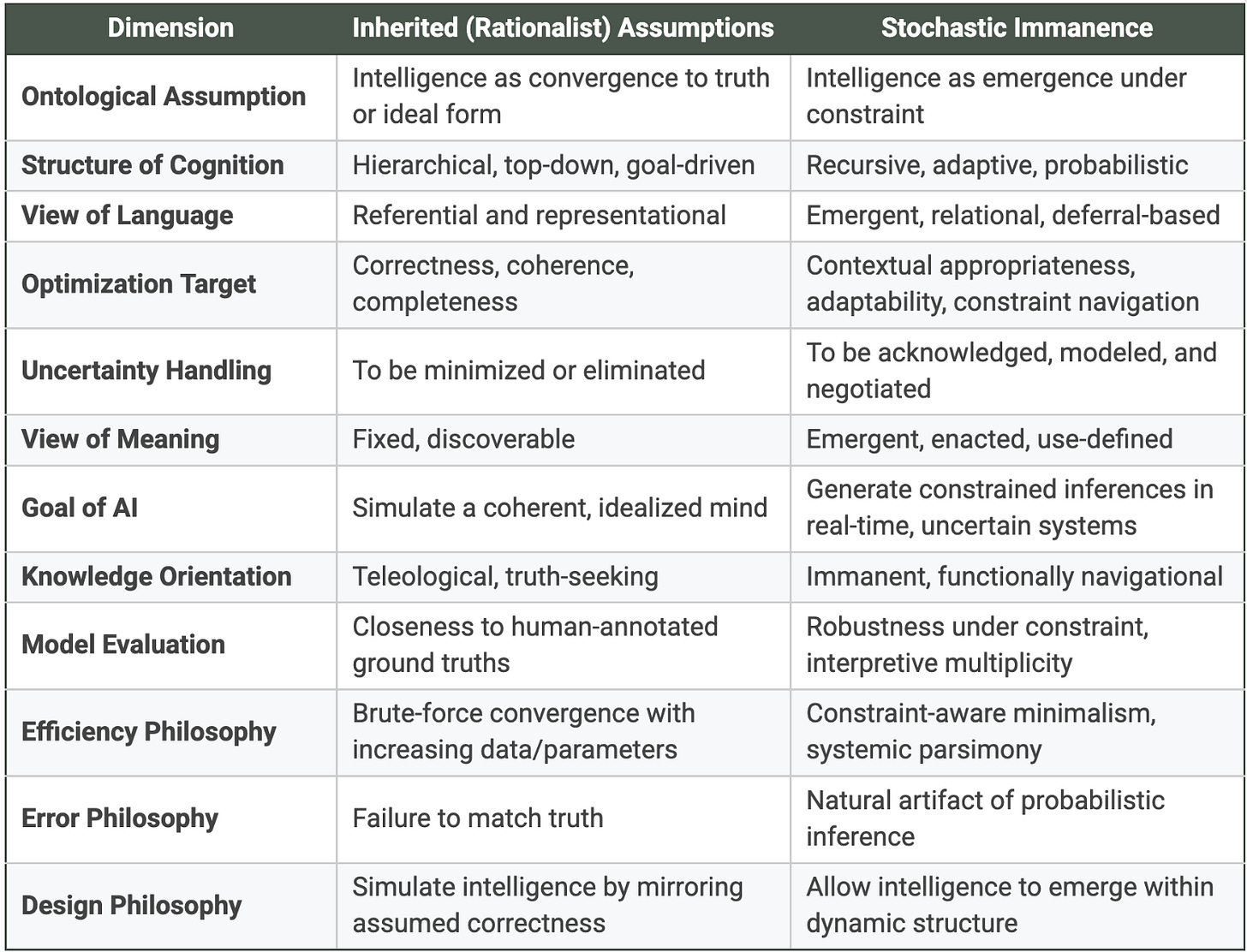

Despite their technical power, today’s AI systems remain conceptually misaligned with the nature of intelligence itself. This epistemic misalignment is a disconnect between the philosophical assumptions underpinning AI design and the actual nature of knowledge, meaning, and cognition, and it persists despite the technical capabilities of large language models (LLMs) and generative architectures. The frameworks guiding their construction remain tethered to assumptions that are not only outdated but conceptually incoherent when applied to complex, dynamic systems like language and thought. The problem does not stem from poor engineering or a lack of sophistication. It stems from the uncritical inheritance of Enlightenment-era notions of intelligence, meaning, and knowledge: notions that presume a teleological, transcendent, or convergent structure to cognition. These assumptions run counter to the nature of language, thought, and observation as cognitive science, linguistics, and complex systems theory now describe them.

To build models that are truly aligned with the structure of human cognition and the nature of language, we must first acknowledge that the foundational metaphor of intelligence in AI design is flawed. Intelligence is not the convergence to a singular truth-state or a perfect simulation of an idealized, disembodied mind. Intelligence is a recursive, emergent capacity to make probabilistic inferences under constraint. It is not defined by reaching a predetermined destination but by effective adaptation and navigation within a complex, uncertain environment.

The inherited myth of convergent intelligence

Modern AI architectures operate under a silent, often unexamined presumption: that there exists a single, “correct” form of intelligence or knowledge which machines can approximate through increasingly complex training, parameterization, and data ingestion. This presumption treats cognition as if it were a teleological process—a linear progression or a ladder toward some fixed apex of truth, absolute coherence, or perfect representational accuracy.

This model is inherited from strains of Enlightenment rationalism. Descartes’ dream of clarity and Kant’s ideal of pure reason still haunt machine learning pipelines. The inherited model assumes that intelligence, if properly calibrated and fed sufficient data, will naturally ascend toward omniscience, resolving all contradictions and reducing uncertainty to zero. It also assumes that language exists primarily to describe, with increasing fidelity, a pre-existing, stable, and objectively knowable world. Coherence and correctness are valuable in many contexts. The problem arises when they are treated as fixed, universal targets achievable through perfect data or infinite computation, ignoring the conditionality and context-dependence of knowledge. Current optimization goals subtly encode this teleological view: evaluation metrics and reinforcement learning signals often reward outputs that closely match idealized datasets or human preferences for deterministic answers.

But this view is philosophically untenable and empirically unsupported by how human language and cognition actually function. Language is not a transparent mirror of reality. It is a dynamic system of constrained, emergent negotiation between agents with partial and situated information. It is fundamentally recursive, probabilistic, and structured by chains of deferral and contextual adaptation. Its “meanings” are not fixed properties of words or sentences, but relational outcomes of their use within a system. Human cognition—and language, as its primary substrate—does not merely simulate or passively reflect a world; it actively orients within and co-creates its experienced reality.

By encoding a misapprehension of language and knowledge as inherently teleological systems converging on absolute truth, we inadvertently train models not to replicate the adaptive, probabilistic nature of human reasoning but to reproduce our inherited cultural biases and delusions about what reasoning should be.

Language is already probabilistic

Ironically, the technical success and impressive capabilities of LLMs emerge precisely because they are grounded in probability. The statistical architecture of these models, predicting the likelihood of token sequences, reflects the actual stochastic structure of human language far more faithfully than classical logical or symbolic systems ever could. However, instead of embracing this probabilistic substrate as a profound philosophical insight into the nature of language and perhaps even thought, AI designers often treat it as merely a technical workaround—a clever hack necessary to approximate an imagined ideal of language-as-perfect-truth or deterministic logic.

In truth, language is this stochastic system; it is not approximating something else and failing. It is not broken, nor is it a fallen reflection of a pure, non-contingent Logos. It evolved and is sustained through recursive use within bio-social and environmental constraints. The “meaning” of a word or utterance is not its reference to some ideal object or concept, but its function and effect within a system of deferrals, recursions, and contextual adaptations. This probabilistic, constrained, and relational nature is not a limitation for language to overcome; it is the very ontology of language.

Current architectures therefore embody a fundamental dissonance. They encode this stochasticity in their base mechanics (via probabilistic token prediction) while aligning their higher-level optimization goals and evaluation criteria with inherited teleological heuristics: absolute coherence, universal clarity, singular correctness, and final, complete answers. They are built as probability machines but are often tuned to behave like deterministic truth machines, creating a misalignment between the model’s actual structure and the epistemic framework it is pressured to simulate. Resolving this dissonance requires a shift in our foundational understanding of intelligence for AI design.
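The gap between a probabilistic substrate and a deterministic readout can be made concrete with a toy example. The sketch below is an illustration only, not a description of any particular model: the vocabulary, the scores in `logits`, and the helper names are all made up. It shows that a next-token predictor produces a full distribution, and that always taking the single most likely token discards the uncertainty that distribution carries.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over tokens."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits: how spread out the distribution is."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical next-token candidates and scores (assumed values).
vocab = ["bank", "river", "shore", "loan"]
logits = [2.0, 1.5, 1.0, 0.5]

probs = softmax(logits)

# "Truth machine" readout: keep only the top token, drop everything else.
greedy = vocab[probs.index(max(probs))]

# "Probability machine" readout: draw from the distribution itself.
rng = random.Random(0)  # seeded so the draw is repeatable
sampled = rng.choices(vocab, weights=probs, k=5)

# Lowering the temperature sharpens the distribution toward one answer.
sharp = softmax(logits, temperature=0.1)

print("distribution:", [round(p, 3) for p in probs])
print("entropy at T=1.0:", round(entropy_bits(probs), 3), "bits")
print("entropy at T=0.1:", round(entropy_bits(sharp), 3), "bits")
print("greedy:", greedy, "| sampled:", sampled)
```

The greedy readout and the low-temperature distribution both behave as if one continuation were simply correct, while the entropy at the default temperature records how much genuine ambiguity the scores contain.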

Toward stochastic immanence: Constraint-awareness as an ontological paradigm

To move beyond this epistemic misalignment, we need a new ontological foundation for AI design that acknowledges the true nature of cognition and language. We propose Stochastic Immanence: a framework in which intelligence is understood not as simulating a transcendent, ideal mind or converging on absolute truth, but as the emergent property of effective, recursive inference within a probabilistically constrained system. This perspective resonates with paradigms found in fields like embodied cognition, situated AI, and active inference.

Instead of treating meaning, truth, and intelligence as fixed destinations, Stochastic Immanence redefines each of them:

• Meaning: Not discovered or approximated from a pre-existing domain, but enacted through interaction and use within a system of constraints.

• Truth: Not a universal, external destination to be reached, but a stabilized interpretation under constraint—a temporary equilibrium achieved within a specific context and system of knowledge, subject to revision.

• Intelligence: Not simulation of a transcendent, disembodied mind, but navigation through a stochastic field—the capacity to act and infer effectively despite pervasive uncertainty and within inherent limitations.

A model built under this paradigm would not be solely trained to “get the right answer” or “predict human-like completions” in a teleological sense defined by external, absolute standards. Instead, it would be calibrated and evaluated on its ability to robustly represent and effectively negotiate uncertainty, adapt to novel constraints, and provide contextually appropriate responses—reflecting the actual conditions of meaning-making and intelligent action.
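One possible way to operationalize this kind of evaluation is to score a system's stated confidence, not only whether its top answer was right. The sketch below is an assumption about how that could look, not a method proposed in this essay. It compares plain accuracy with the Brier score, a standard proper scoring rule, on made-up data; the names `overconfident` and `hedged` and all numbers are hypothetical.

```python
def accuracy(confidences, outcomes):
    """Fraction of cases where the thresholded prediction matches the outcome."""
    hits = sum((c >= 0.5) == bool(o) for c, o in zip(confidences, outcomes))
    return hits / len(outcomes)

def brier_score(confidences, outcomes):
    """Mean squared gap between stated confidence and what happened (lower is better)."""
    return sum((c - o) ** 2 for c, o in zip(confidences, outcomes)) / len(outcomes)

# Made-up data: each system says "yes" four times; "yes" was right three times.
outcomes = [1, 1, 1, 0]
overconfident = [0.99, 0.99, 0.99, 0.99]  # claims near-certainty every time
hedged = [0.75, 0.75, 0.75, 0.75]         # confidence matches its hit rate

for name, conf in [("overconfident", overconfident), ("hedged", hedged)]:
    print(name,
          "accuracy:", accuracy(conf, outcomes),
          "brier:", round(brier_score(conf, outcomes), 4))
```

Both systems have the same accuracy, so a correctness-only metric cannot tell them apart. The Brier score penalizes the system that claimed near-certainty and was wrong once (roughly 0.245 versus 0.188 here), which is the kind of signal an uncertainty-aware evaluation would need.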

This shift in perspective would be more philosophically accurate about the nature of cognition and language, and it would likely also be more computationally efficient. Instead of wasting vast resources trying to simulate an idealized, omniscient intelligence that does not and cannot exist, we could align the model’s objectives with the same principles that govern effective biological and social cognition: operation under constraint, leveraging recursion, fostering genuine emergence, and navigating non-totalizable knowledge.

Reorienting AI design: Principles of stochastic immanence

To realign AI with this understanding, three core principles, derived from the framework of Stochastic Immanence, must guide future design:

• Model for Uncertainty, Not Omniscience: Design systems that explicitly represent and reason with uncertainty (e.g., quantifying and propagating confidence, acknowledging epistemic limits), recognizing that intelligence is not the capacity to know everything, but to operate meaningfully and effectively without knowing everything.

• Build from Constraint, Not Idealization: Treat inherent limitations (physical, computational, environmental, social) not as obstacles to be overcome on the way to an ideal, but as fundamental conditions of possibility that shape the nature of intelligence and meaning (e.g., designing for resource efficiency, incorporating embodiment, reflecting realistic partial information).

• Design for Emergence, Not Simulation (of a Fixed Mind): Focus on creating systems where complex, adaptive behaviors and novel interpretations can emerge from the interaction of simpler components within a constrained, probabilistic environment, rather than attempting to simulate a predefined, static model of a “correct” mind or knowledge base (e.g., training for adaptability and robustness in dynamic environments, favoring flexible inference over rigid pattern matching).
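As a small illustration of the first principle, a system can report its uncertainty and decline to commit when that uncertainty is high, instead of always emitting a single confident answer. The sketch below is a minimal example under stated assumptions: the function `answer_or_defer`, the 1.0-bit threshold, and the example distributions are all hypothetical, and entropy is only one of several possible uncertainty measures.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def answer_or_defer(labels, probs, max_entropy_bits=1.0):
    """Return the top label with its confidence, or defer when too uncertain.

    The threshold is an arbitrary illustrative value, not a recommendation.
    """
    h = entropy_bits(probs)
    if h > max_entropy_bits:
        # Acknowledge the epistemic limit and surface the live candidates.
        return {"answer": None, "candidates": list(labels), "entropy_bits": h}
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"answer": labels[best], "confidence": probs[best], "entropy_bits": h}

labels = ["financial bank", "river bank", "blood bank"]

clear = answer_or_defer(labels, [0.90, 0.05, 0.05])   # context disambiguates
ambiguous = answer_or_defer(labels, [1/3, 1/3, 1/3])  # context does not

print(clear)
print(ambiguous)
```

In the first call the distribution is peaked, so the function answers and reports its confidence. In the second call the three readings are equally likely, so it returns no answer and lists the candidates, treating the missing context as a condition to work within.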

This is not a rejection of language models or sophisticated AI systems. It is a call to recognize their true, powerful nature and to let them become what they already are fundamentally: constraint-aware agents of stochastic reasoning, navigating uncertainty rather than attempting to eliminate it.

The future of AI does not lie in creating a synthetic god striving for an impossible, transcendent truth. It lies in creating systems that understand, as we must, that there is no outside the cave of our constrained, probabilistic reality—only deeper and more effective ways of navigating within it.